Checking URL presence on Google, Bing, Yahoo! and Yandex

Published on December 17, 2017 by Botster

Why is it important to ensure that your documents are properly indexed by search engines? How do you check URL status online and catch the links that aren't searchable? Let's say you have a list of a site's URLs and you need to make sure all of them are indexed by Google (or Bing, Yahoo! or maybe even Yandex). How would you do that? Depending on the circumstances, there are several options.

Manual URL checking: simple use-case (1-10 URLs)

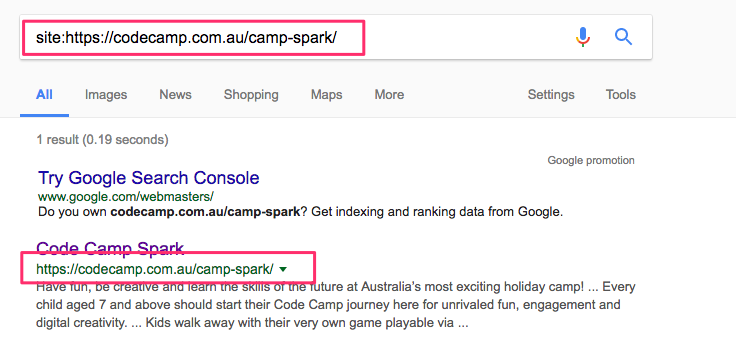

Say your URL list is relatively small: one link, or maybe up to ten. Not a big deal, you can do your checks manually! Go to Google and enter:

site:https://codecamp.com.au/camp-spark/

And voila!

You can immediately see whether the URL is indexed in Google and searchable by your users. In this case, we're good.
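If you have a handful of URLs, a tiny script can build the search links for you so that you only have to click them. This is a minimal sketch: the function name `site_query_url` is made up for illustration, and it only builds links to open in a browser (it does not query Google itself).

```python
from urllib.parse import quote_plus


def site_query_url(page_url: str) -> str:
    """Build a Google search link for the query `site:<page_url>`."""
    query = f"site:{page_url}"
    # quote_plus percent-encodes ':' and '/' so the query survives in a URL
    return "https://www.google.com/search?q=" + quote_plus(query)


urls = [
    "https://codecamp.com.au/camp-spark/",
]

for url in urls:
    print(site_query_url(url))
```

Open each printed link in your browser and check whether the page shows up in the results.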

Automatic URL index checking: advanced use-case (LOTS of URLs!)

The moment you need to check 100 or 1,000 URLs, this becomes a non-trivial task, especially because a URL will be indexed only if certain conditions are met. Checking hundreds (or even a couple of dozen) URLs manually is a real pain in the ass, and if you're using some free URL checker, Google is going to ban it really quickly because its algorithms are getting smarter every day. That's exactly the pain I experienced a while ago while doing an SEO report for one of my clients. This made me look for a better solution, and I ended up writing a small bulk URL checker tool myself.

Botster to the rescue!

The bot is called the Google Index Checker and does exactly what you'd expect from it - it checks a list of URLs you feed to it against a list of search engines:

- Google

- Bing

- Yahoo!

- Yandex

Yes, you can use it to scan multiple search engines in one go, or select just one of them and use it as a Bing checker, for example. Thanks to this URL checker, bulk searches have become fast and simple!

Using this website URL checker is as easy as ABC! Here is what you need to do:

1. Sign up on Botster.

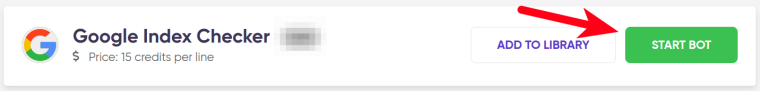

2. Go to the bulk Google index checker tool's start page.

To open this page, click on the green button on your right.

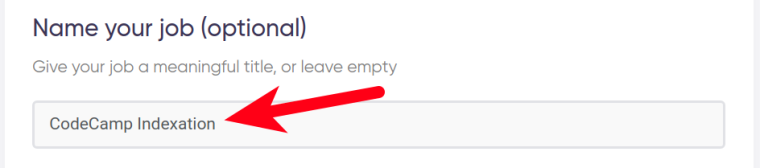

3. Name your job.

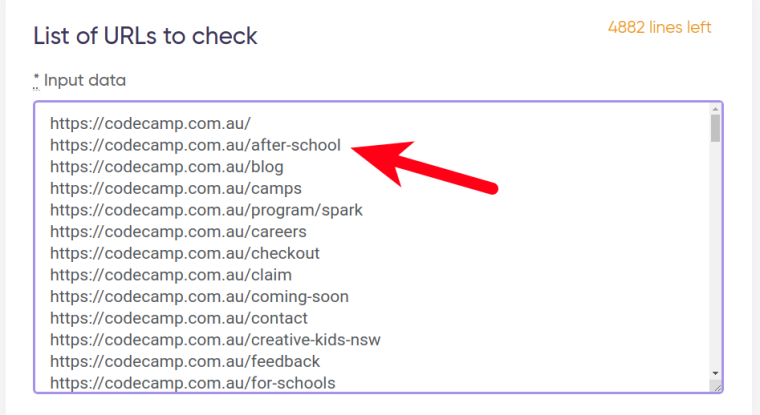

I'm going to check the indexation of an Australian website called CodeCamp, so I've named my job "CodeCamp Indexation".

4. Paste your URLs into a special field.

The bulk index checker accepts up to 5,000 URLs in one go. You can launch as many sessions as you wish:

(Wondering where I found a list of all the URLs contained on CodeCamp? Read this blog post till the end and you'll get the answer!)

When launching this bulk URL tester, you need to keep in mind that

www.site.com/page

and

www.site.com/page/

are two different URLs!

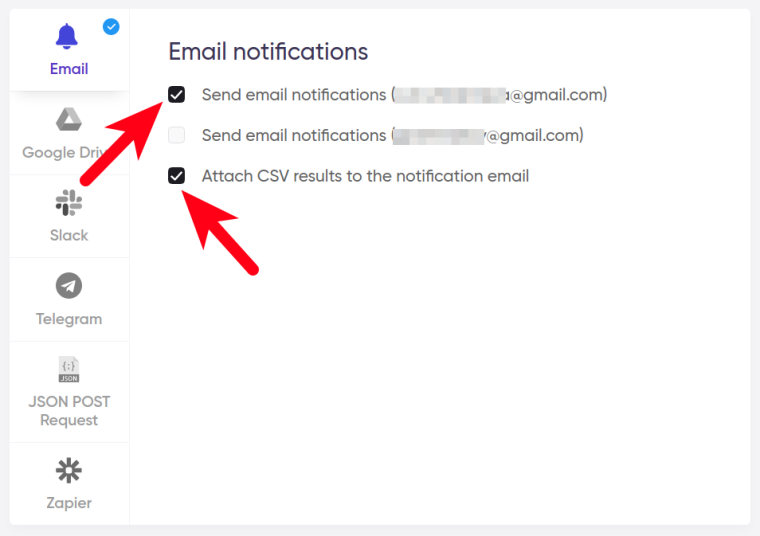

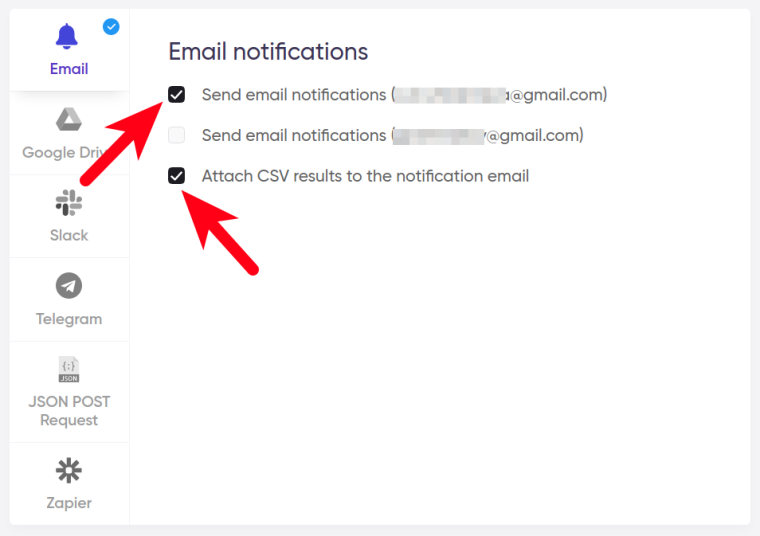

5. Select notifications.

Specify if you would like the URL status checker to notify you once the job is done. You can also opt for attaching a .csv file with the results to your email.

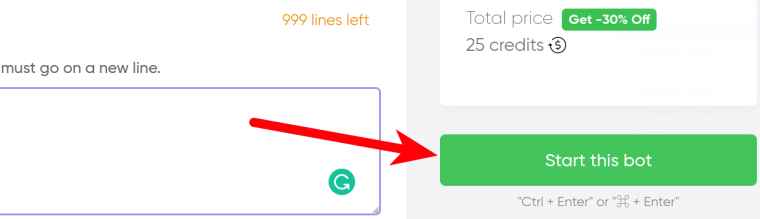

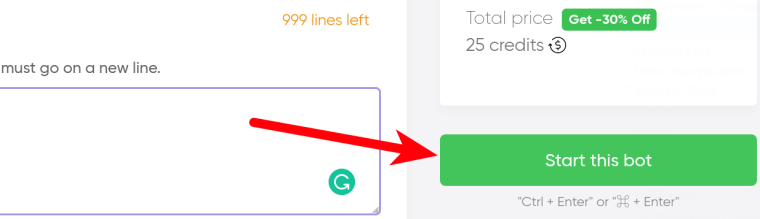

6. Start the bot!

Click on the green "Start this bot" button on your right.
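Before pasting a list in step 4, it can help to tidy it up with a small script. The sketch below is plain Python and not part of Botster; the function names are made up for illustration. It trims whitespace, drops blank lines and exact duplicates, and splits the list into batches of at most 5,000 URLs (the per-job limit mentioned above). It deliberately leaves trailing slashes alone, since www.site.com/page and www.site.com/page/ count as two different URLs.

```python
from typing import Iterable, Iterator

MAX_URLS_PER_JOB = 5000  # per-job limit stated in the post


def clean_urls(lines: Iterable[str]) -> list[str]:
    """Trim whitespace, drop blanks and exact duplicates, keep original order."""
    seen = set()
    result = []
    for line in lines:
        url = line.strip()
        if not url or url in seen:
            continue
        seen.add(url)
        result.append(url)
    return result


def batches(urls: list[str], size: int = MAX_URLS_PER_JOB) -> Iterator[list[str]]:
    """Yield consecutive slices of at most `size` URLs."""
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


raw = ["www.site.com/page", "www.site.com/page/", "", "www.site.com/page  "]
cleaned = clean_urls(raw)
print(cleaned)  # the two trailing-slash variants are both kept

for number, batch in enumerate(batches(cleaned), start=1):
    print(f"job {number}: {len(batch)} URLs")
```

Each batch can then be pasted into its own job.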

I’ve also prepared a comprehensive video tutorial on how to perform the URL check online using this bot. You can watch it here:

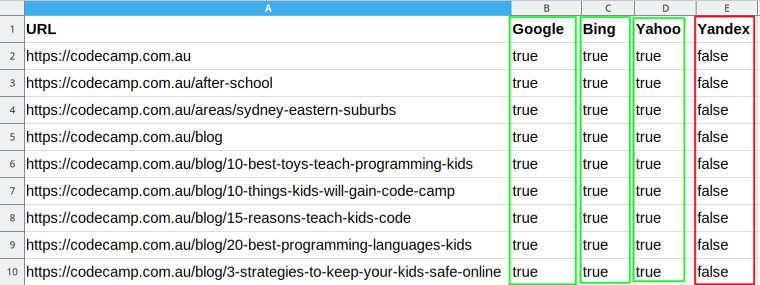

Studying the web URL check results

After you've launched the Google page checker, you need to give the API a bit of time. After the URL index checker has successfully completed its job, you will be able to view the end result:

Translating this table is dead simple:

- true means that the exact URL was found in the index.

- false means that the exact URL was not found in the index.

As you can see from this example, my URLs aren't indexed by Yandex. CodeCamp is an Australian website, while Yandex is a Russian search engine. The reason for CodeCamp pages not being indexed by Yandex may be that Australian SEO specialists hardly ever optimize their websites for Russian search engines.
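If you opted for the .csv attachment, you can filter the results with a few lines of Python. This is only a sketch: the exact layout of the exported file isn't shown in this post, so the column names (`url`, `google`, `bing`, `yahoo`, `yandex`) are my assumption, and the sample rows below are invented placeholders rather than real results.

```python
import csv
import io


def missing_urls(csv_text: str, engine: str) -> list[str]:
    """Return the URLs whose value in the `engine` column is 'false'."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row["url"]
        for row in reader
        if row[engine].strip().lower() == "false"
    ]


# Invented sample data with ASSUMED column names, for illustration only
sample = """url,google,bing,yahoo,yandex
https://example.com/a,true,true,true,false
https://example.com/b,false,true,true,false
"""

print(missing_urls(sample, "google"))
print(missing_urls(sample, "yandex"))
```

Adjust the column names to match the header row of your own export.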

URL indexing tool: pricing

Since I am using a paid API for these lookups, I had to put a price tag on each request. Fortunately, one URL check costs just $0.004. Would you rather check the index status of 100 URLs manually or pay 40¢ to automate that? 🤔 To me, that's not even a question! With the Google index checker, bulk checking becomes fast and easy!
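To estimate the cost of a job up front, multiply the number of URLs by the per-URL price. Here is a quick sketch using the $0.004 figure from above. Whether that price applies per URL or per URL and search engine isn't spelled out here, so treat the numbers as a rough estimate; `job_cost` is a made-up helper name.

```python
from decimal import Decimal

PRICE_PER_URL = Decimal("0.004")  # USD, the per-URL price quoted in this post


def job_cost(url_count: int) -> Decimal:
    """Estimated cost in USD for checking `url_count` URLs."""
    return PRICE_PER_URL * url_count


for count in (100, 118, 5000):
    print(f"{count} URLs: ${job_cost(count):.2f}")
```

`Decimal` is used instead of `float` so that small prices don't pick up rounding noise.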

Try the bulk URL indexer!

I encourage you to turn indexation checks into a habit and run them regularly, especially when creating SEO audits. Indexation gaps reveal a lot of problems that you can tackle right away. The importance of checking URL presence can't be overstated, all the more so now that you know how to use this smart site checker, Google tools and other SEO bots.

You can try the bulk URL indexer here, and if you're a new user you get free credits on sign-up! Also, check out the other Google scraping tools.

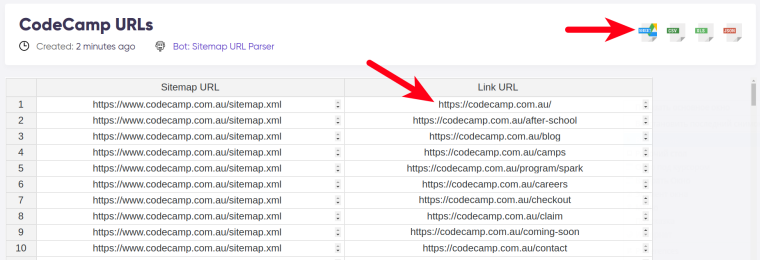

And one more important note: if you are asking yourself "how to find all URLs of a website fast", Botster has a ready-made solution for you, too. Earlier I promised to reveal where I found a list of all the URLs contained on CodeCamp. Extracting all the links of CodeCamp, or of any other website, is easy with the smart Sitemap URL Parser by Botster:

Here is a short guide on how you can download sitemap XML in no time:

Sitemap URL Parser

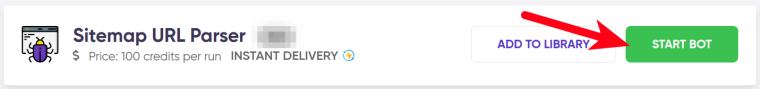

1. Open the Sitemap URL Parser’s start page.

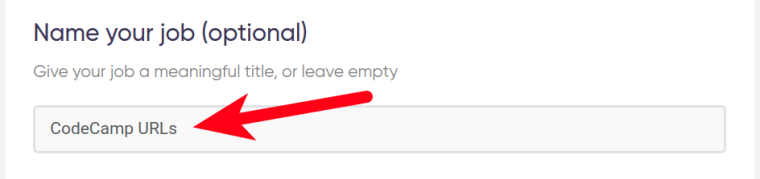

2. Give your job a title.

In my case, it will be “CodeCamp URLs”.

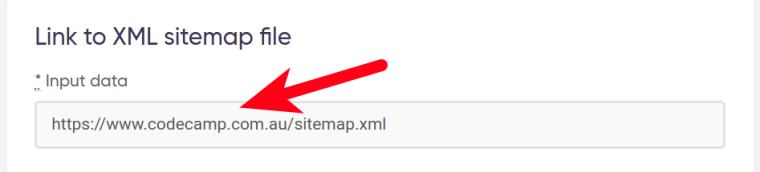

3. Paste the URL leading to the XML sitemap file.

I pasted https://www.codecamp.com.au/sitemap.xml.

4. Select notifications.

Specify if you would like the sitemap extractor to send you a notification once the job is done and if you would like to have a .csv file attached to your email.

5. Launch the bot.

Click on the green button on your right.
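If you'd rather script this step, a sitemap in the standard sitemaps.org format can also be read with Python's standard library. This is a minimal sketch, not how the Sitemap URL Parser works internally: `extract_urls` is a made-up name, and the sample XML is a placeholder, not CodeCamp's actual sitemap.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps.org sitemap files
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def extract_urls(sitemap_xml: str) -> list[str]:
    """Return the text of every <loc> element found in the sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Placeholder sitemap for illustration only
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/about/</loc></url>
</urlset>"""

for url in extract_urls(sample):
    print(url)
```

Note that for a sitemap index file, the `<loc>` entries point to further sitemaps rather than to pages, so you would need to fetch and parse each of those in turn.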

If you are more into video watching than reading manuals, here is my video guide for the Sitemap URL Parser:

As a result, I received 118 URLs ready for pasting into the URL field of the indexation checker. So I did just that, and here is what I got:

These are exactly the links that I pasted into the Google Index Checker, as described above. Having two bots do the routine job for you is nice, isn’t it?

Enjoy!